AI Is Coming For Your Children

Con-men are flooding Kindle with AI children's books. They could do permanent damage to childhood literacy.

The Internet is awash with cheap takes on what AI means for the future, ranging from Terminator-inspired fears of military robots killing their operators to articles claiming AI is going to save education and rescue students (the latter features Bill Gates excitedly explaining how OpenAI taught a chatbot to pass an AP test). Somewhere between these two hype peaks sits this story about an author who wrote “97 books” in 9 months using ChatGPT.

The real story is rather less exciting than you might think. The “author”, Tim Boucher, used an AI to generate dozens of 2,000- to 5,000-word “stories”, each with dozens and dozens of illustrations crudely rendered by Midjourney. He dumped these onto a webpage where people can pay $2.99 if they want to read thrilling stories like “Occupy A.I.”, where protesters square off against cops who look curiously like the bad guys from Spaceballs.

I don’t have much respect for Tim’s project, but its potential for harm is fairly minimal. All of his ‘works’ are geared towards adults. But there’s something more sinister lurking behind the florid headlines predicting AI doom or salvation. The robots are coming for your children.

I was tipped off to this story by a Reuters article about a “boom” in AI-written e-books on Amazon. The piece zooms in on a wannabe author named Brett Schickler, a salesman from Rochester who heard ChatGPT was capable of writing stories and decided he’d been given a new lease on his dreams: “The idea of writing a book finally seemed possible. I thought, I can do this.”

His book, “The Wise Little Squirrel: A Tale of Saving and Investing”, is a guide to financial literacy through the eyes of Sammy the Squirrel, who gets some credit for being the least deranged-looking character featured in this article. This book, available in paperback only, is currently around #30,000 in Literature & Fiction for Children.

Late last year Folding Ideas, a YouTube channel run by researcher and writer Dan Olson, published an investigation into a group of Amazon con-artists who promise their followers big money if they take classes to learn how to mass-produce and sell low-quality books on Amazon KDP (Kindle Direct Publishing) and Audible. The topics of the books didn’t really matter, as long as they included keywords that corresponded to popular searches on Amazon. The writing was all done by barely paid ghostwriters. The goal was to trick enough people searching for real books on various topics into buying trash books that the whole endeavor turned a profit.

The main barrier in terms of both production time and profitability was the ghostwriting. A human being can only write so fast, even if they’re just re-wording a Wikipedia article. ChatGPT and other large language models provide an incredible opportunity for these hustlers to increase their output. There’s only one problem: getting ChatGPT to write an entire proper novel (50k+ words) is basically impossible right now. That’s why all of Tim Boucher’s books were just a couple thousand words long.

Children’s books are a different story (forgive the pun) because they are heavy on illustrations but light on text. A page can carry just a paragraph or two without looking suspiciously thin. And sure enough, a brief YouTube search reveals video after video with titles like “EASY AI Money: Make $100k Writing Children’s Books with ChatGPT and Midjourney” and “Easy PASSIVE Income With ChatGPT and Midjourney Creating Children’s Books”.

I watched too many of these videos, all of which lay out the same strategy. The first step is to pick a prompt. An actual author might decide, ‘I want to teach children about the importance of conservation with a cautionary tale about thoughtless capitalization of natural resources’ or ‘I want to write a book about a bat raised by birds to show kids that bats are beautiful and complex creatures, not just spooky set dressings for vampire stories’. But chatbots and grindset influencers are both incapable of feeling things, or wanting to transmit their feelings to others.

Instead, the process of picking a topic for an AI children’s book is mechanical and bleak. It starts with a trip to Amazon’s sales rankings. An extension like BookBolt is used to show which topics are selling well without being too crowded by competition.

The most successful AI kids’ books will only sell dozens, or perhaps a hundred or so copies, but the people making these videos try to bait their hooks by showing sales calculations from successful books by human writers to make the case that AI kids’ books are an untapped goldmine.

The Zinny Studio notes that you want to copy the premises of real kids’ books that have several hundred reviews, but ideally fewer than a thousand. My guess would be that this is to avoid doing anything too high profile. You’re looking for a sweet spot where there’s a lot of demand but not too much attention.

There’s also a strategy for price points. The Zinny Studio points out that Amazon pays a 70% royalty rate for books that cost from $2.99-$9.99 but books under $2.99 only pay out 35%.
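To see why that price floor matters so much to these hustlers, here is a minimal sketch of the royalty math as The Zinny Studio describes it. It assumes a flat 70% rate from $2.99 to $9.99 and 35% everywhere else; the real KDP terms also subtract delivery fees and vary by marketplace, which this deliberately ignores. The function name is hypothetical.

```python
# Simplified e-book royalty rule, as described above (an assumption, not Amazon's full terms):
# 70% of list price from $2.99 to $9.99, 35% otherwise. Delivery fees are ignored.

def ebook_royalty(list_price: float) -> float:
    """Return the per-copy royalty in dollars under the simplified rule."""
    rate = 0.70 if 2.99 <= list_price <= 9.99 else 0.35
    return round(list_price * rate, 2)

# Compare prices just below and inside the 70% band
for price in (0.99, 2.98, 2.99, 4.99):
    print(f"${price:.2f} -> ${ebook_royalty(price):.2f} per copy")
```

Under these assumptions, cutting the price by a single cent, from $2.99 to $2.98, drops the royalty from about $2.09 to about $1.04 per copy, which is why the guides all cluster at $2.99 or above.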

Some creators will take their research and use it to create an original premise, but for the most part they just collect keywords and ask the AI to generate a story premise, which they then feed back to it, thus avoiding the risk that human creativity might be involved at some point in the process.

There are only occasional weak jabs at encouraging original thought. One guide I found (268k views) was by The Zinny Studio. It cautioned viewers:

“You’re not doing research to find books to copy, you’re doing research to understand what is working. It’s important to make your own original pieces…so you can stand out from the competition.”

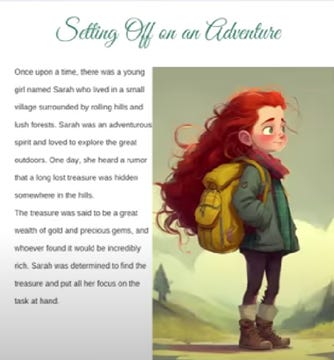

But here’s the actual prompt The Zinny Studio used to generate their example children’s book:

“Write me a children’s story about a girl going on an adventure to find a missing treasure. lesson ‘You achieve what you put your mind on’. Make it 6 pages and title each page. make is mind blowing and intriguing”

The spelling and punctuation mistakes are all theirs. As you can see, actual characters, a conflict and a plot are not necessary. This is sufficient to generate something that technically qualifies as a storybook:

“Page 1: ‘The Missing Treasure’

Once upon a time there was a brave and curious girl named Sarah. She lived in a small village at the foot of a big mountain. One day, she heard about a missing treasure that was hidden in the mountain a long time ago. Sarah was very intrigued and decided to go on an adventure to find the missing treasure.

Page 2: “The Journey Begins”

Sarah packed her backpack with food, water, and a map, and set out on her journey. She hiked up the mountain, and along the way, she met many animals who offered to help her. But Sarah declined their help, saying, “I want to do this on my own.”

Page 3: ‘The Cave of Trials’

After several days of hiking, Sarah finally reached the top of the mountain. There she found a cave that led deep into the mountain. She entered the cave and was met with many trials, including steep cliffs, deep chasms, and dark tunnels. But Sarah didn’t give up. She kept moving forward, always remembering the lesson she learned from her parents: “You can achieve what you put your mind to.”

You see what the machine is doing here. The prompt asked for an intriguing story so it described Sarah as “very intrigued”. The prompt asked for the story to include the lesson, “You achieve what you put your mind on”, so ChatGPT had Sarah remember that her parents told her that after she went through a series of “trials” that aren’t actually described.

I found an even lazier example of synthetic storytelling in a video by Christian Heidorn (120k views) whose YouTube channel is called Tokenized AI. He had Midjourney create what he calls a “comic book”, using an equation for story ideas that reads like the brain of every Netflix executive.

Christian decides to have the machine write him a comic book with Indiana Jones / Uncharted-style vibes. The vibes are purely a product of the (derivative, plagiarized) artwork, because the actual ‘plot’ here is nonexistent.

Looking at a bunch of these in a row pulls the curtain aside on AI storytelling. Much of the dialogue is utterly nonsensical: why is Leo saying “That’s my line!” in Scene 1? He’s never said it before in this story, and it doesn’t really make sense as a joke. I can only guess that at some point a real person wrote a story with that line as a retort, and whatever calculation ChatGPT ran to finish that page concluded it was probably appropriate.

Scene 5 shows us what happens when these things attempt to generate conflict. Spoiler alert: Maya and Leo decide not to take the treasure, choosing friendship over greed. But at no point is it explained why they wouldn’t be able to have both. There’s no actual reason for them not to take the treasure. Leo just says, “But at what cost?” and the tale moves along.

What we have here is the simulacrum of a story, technical ingredients arranged in such a way that it satisfies the needs of someone whose only purpose is to trick overworked parents into buying a copy for their child, who will likely be too young to elucidate why something seems wrong with their new book.

Because the text generated by these AI programs is really just chopped and screwed together pieces of actual stories written by people, AI hustlers are very concerned with plagiarism. So they feed their text into apps like Grammarly to run test plagiarism scans. Inevitably, the story fails.

Worry not, there’s a simple solution: Quillbot. Billed by its creators as an “online paraphrasing tool”, Quillbot combs through a document and replaces the adjectives and verbs with synonyms (‘courageous’ for ‘brave’, ‘resided’ for ‘lived’ etc). Simply feed your Frankentext into Quillbot and you’ve got something that won’t trigger Amazon’s plagiarism sensors.
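Here is a toy sketch of why word-swapping works against a checker that looks for shared phrases. Two things are assumptions: the tiny synonym table (Quillbot's actual method is not described here) and the idea that the checker counts shared three-word sequences (neither Grammarly's nor Amazon's method is documented here). Both function names are hypothetical.

```python
import re

# Hypothetical synonym table; a real paraphrasing tool would use far more than this
SYNONYMS = {
    "brave": "courageous",
    "lived": "resided",
    "big": "large",
    "decided": "resolved",
}

def naive_paraphrase(text: str) -> str:
    """Swap each word found in SYNONYMS for its synonym, leaving everything else intact."""
    def swap(match: re.Match) -> str:
        word = match.group(0)
        replacement = SYNONYMS.get(word.lower())
        if replacement is None:
            return word
        # Keep a leading capital letter so sentences still start with one
        return replacement.capitalize() if word[0].isupper() else replacement
    return re.sub(r"[A-Za-z]+", swap, text)

def trigram_overlap(a: str, b: str) -> float:
    """Jaccard similarity of the two texts' three-word sequences (a stand-in 'plagiarism score')."""
    def grams(t: str) -> set:
        words = re.findall(r"[a-z]+", t.lower())
        return {tuple(words[i:i + 3]) for i in range(len(words) - 2)}
    ga, gb = grams(a), grams(b)
    return len(ga & gb) / len(ga | gb)

original = ("Once upon a time there was a brave and curious girl named Sarah. "
            "She lived in a small village at the foot of a big mountain.")
rewritten = naive_paraphrase(original)
print(rewritten)
print(trigram_overlap(original, original), trigram_overlap(original, rewritten))
```

Swapping just three words in the Sarah passage cuts this overlap score from 1.0 to 0.5, even though the story is exactly the same.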

(It’s actually unclear to me how Amazon scans for plagiarism. I reached out to one of their PR reps and asked about their policies on plagiarism and AI generated books, but have not heard back at time of publication.)

Once they’ve got passable text, the next step is to create image prompts for all the ‘illustrations’ in the book. Again, there’s no room for creativity here. For each page they ask ChatGPT to generate descriptions of the images needed for that text. Then they feed those descriptions into Midjourney, Leonardo or some other AI image generator to produce something like this:

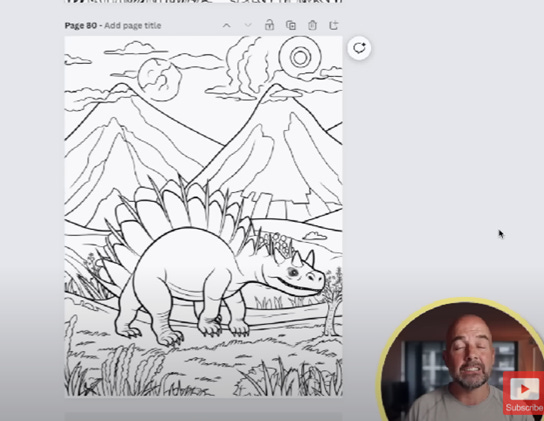

By the standards of AI children’s book art, this is actually pretty good. The worst-case scenario is shown in this video (412k views) by Paul Marles. Prior to the AI explosion he was teaching people how to con rubes through Amazon KDP the old-fashioned way. His videos had titles like “$9636 From 30 to 60 Minutes Work!! -Make Money Online With KDP Low Content Books”. He has dozens of books for sale on Amazon.

Paul ups the ante on laziness here by cutting out the text entirely and instead generating a children’s coloring book he hopes will SEO well enough (because it’s about dinosaurs) to turn a profit. From a half-assed prompt that misspells the name of the dinosaur…

…we get a series of off-putting and biologically inaccurate T-Rexes, all of which have massive front legs instead of tiny arms. The claws are just as fucked up as AI hands tend to be, although Midjourney is smart enough to hide them, Liefeld-like, in the top left image.

Paul’s guide also goes through how to lay out your coloring book for publication, cobble together a cover, and upload it to Amazon where they’ll ship physical copies off to whoever you can trick into ordering one.

My worry here is that the parents who fall for these books are likely to be overworked, with low budgets and little free time to vet purchases. They might just click on whatever is affordable. I also worry charities that provide poor kids with free books might do bulk orders of this junk because the price looks right. In our glorious AI future, rich kids get to color in proper dinosaurs while poor kids grow up thinking Stegosaurus had no tail and the earth used to have a second moon that looked like a human nipple:

Paul did respond to my request for comment. I asked if he was concerned that his coloring book or others like it might have a “negative impact” on children who received the coloring books. Paul did not answer this question. He claimed, “The images I used in the video were just examples and not to be taken as exact images I published.”

But a cursory search of Amazon shows numerous coloring books that look quite similar to the one Paul shows in his video. Here’s his book:

Here are several near-identical copies all sold under different names. Note how some have missing legs, or extra legs, or distorted nightmare arms. All are clearly AI works.

I asked Paul if this was one of his or if he thought it was made by one of his viewers. He just said, “that is not my book”. Perhaps I should have sent along this cover, which features what I can only describe as a hybrid of a T-Rex and Salad Fingers:

Despite featuring easily the worst dinosaur drawing I’ve seen in my life, this coloring book appears to be selling reasonably well. At the time this article was written it was #75 in the Teen & Young Adult Drawing category.

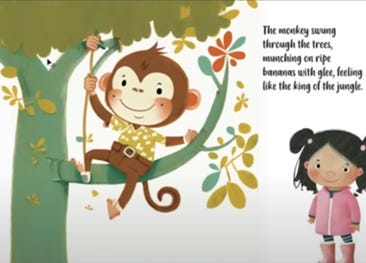

In addition to making 3-legged T-Rexes, most AI image generators struggle to keep characters consistent across multiple images within a single book. Most of the creators I followed just ignore this, trusting that what they put out looks close enough to fool someone skimming over the book. If the prompts are engineered carefully enough, sometimes different pictures look pretty close to right.

But that is rarely the case. The laziest example of this I’ve found is from “Treasures Beyond Gold”, the adventure comic book I described earlier. Christian Heidorn, the author, prefers very technical prompts for his images, but this still means that he’s just asking the machine to draw “attractive Western man” or “young Asian woman” on each page.

That results in a comic book where every page features totally different characters, often drawn in different styles. In the second image here she’s been anime’d up by at least 20% and he’s gone from looking like fanart of Dean Winchester to having like 15% Shaggy from Scooby Doo tossed into the mix.

The first of these pictures is supposed to show both characters bumping into each other in a Southeast Asian market while reading identical treasure maps. Not only is no map featured in the image, but it just looks like a head-on portrait. There’s no visible evidence of either character interacting.

An adult will have no problem picking this out as just cheap weird shitty AI art. But these books could be quite damaging to little kids. A substantial body of research into emergent literacy (the reading and writing skills possessed by a child before they learn how to formally read or write) has shown that illustrations play a crucial role in transmitting the building blocks of literacy. One study on emergent reading strategies by Judith Lysaker and Elizabeth Hopper of Purdue University notes:

“Emergent reading practices such as wordless book reading are often seen as precursors to the meaning making that comes later during print reading.”

And that’s what actually scares me about Midjourney-generated kids’ books. Small children are info-vacuums, hoovering up observations about the world at a terrifying pace. We know that before they can read, little kids come to understand things like story structure and the meaning of words and phrases by studying the illustrations that accompany text. AI images are generated without any intent or understanding, and as a result they always look somewhat disjointed and off.

So the worry here is not just that parents get tricked into buying bad books. It’s that AI books are so incomplete and broken they might fundamentally damage the way young children acquire reading skills.

AI advocates insist that this is just version 3 or 4 or whatever of [App X], and that the programs will only get better as time goes on. This is far from guaranteed. For one thing, the popularity of these tools means more AI-generated crap will get spat out onto the Internet. Since new AIs will be trained on this content, there’s a real risk of a feedback loop that leads to what’s called model collapse. A group of researchers, in a paper posted to the open-access preprint repository arXiv, described this as what happens when the “...use of model-generated content in training causes irreversible defects in the resulting models.”
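For a feel of how that feedback loop can degrade things, here is a deliberately tiny toy, not the researchers’ actual experiment. Each “model” is just a bell curve (a mean and a spread) fitted to a handful of samples drawn from the previous model, so every generation trains only on the last one’s output. The parameter values and the function name are assumptions chosen for illustration.

```python
import random
import statistics

def simulate_collapse(generations: int = 200, sample_size: int = 10, seed: int = 0) -> list[float]:
    """Toy model collapse: each generation fits a Gaussian to samples from the previous fit.

    Returns the fitted standard deviation (the 'variety' of the output) after each generation.
    """
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # stand-in for the original, human-made data distribution
    history = [sigma]
    for _ in range(generations):
        # The next "model" only ever sees data produced by the current one
        samples = [rng.gauss(mu, sigma) for _ in range(sample_size)]
        mu = statistics.fmean(samples)
        sigma = statistics.pstdev(samples, mu)
        history.append(sigma)
    return history

history = simulate_collapse()
for gen in (0, 10, 50, 100, 200):
    print(f"generation {gen:3d}: fitted std = {history[gen]:.3g}")
```

Because each small sample tends to miss the rare, unusual values at the edges, the fitted spread tends to shrink generation after generation, and in this toy it ends up close to zero: the output converges on one bland, repeated value.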

I find this particularly worrisome because the present models are already full of defects. Take the storybook generated for a video called “I Create a Best Selling Children’s Book Using AI in Under an Hour”. Here’s the creator, Grayson Sands. I have reached out to him for comment but have not received a response as of publication. His sunglasses irritate me.

He decides to generate a book featuring a character based on a tattoo he got of a Triceratops playing a stand-up bass:

Midjourney did a decent job on the tattoo itself, but as you can see the world behind the character looks a bit…off.

This becomes more obvious in subsequent pages:

Now I can admit there’s something interesting and amusing about the hallucinatory nature of this art, but it has nothing to do with the story, which is about a Brachiosaurus (who plays piano for some reason) teaching a Triceratops how to play bass.

At the end of the video, Grayson tells us how he got his book to be a “best-seller”. It turns out he just called up a bunch of his friends and family and told them he’d written a book that was on Amazon and begged them to buy it. They did, and he shot up through the rankings (it doesn’t take a lot with print books) and started appearing higher in Amazon’s search results.

I can’t tell you how well this actually worked. At present “Dandy the Dinosaur” only has 2 reviews. Both are five stars, but it’s impossible to know if those are real or not. Even if Grayson’s effort to game the system was unsuccessful, other people with more resources, and an actual agenda, will find ways to succeed here. And that brings me to this fellow:

His name is Lucas Kitchen and based on the illustration for his book you’d guess it was about an old man and his dog getting murdered in the woods by a rabid unicorn.

It’s actually much weirder than that. Lucas is an Evangelical Christian who writes sci-fi books about proselytizing on Mars. His story prompt is easily the oddest one I’ve come across, although it does at least seem to have been written by a human.

“Write a children’s story where the protagonist is a little puppy named Fluff. Fluff wants someone to tell him about Jesus, but he can’t read John 3:15, so he needs a solution. The antagonist of the story is a bad unicorn. In the story include a field with trees. Also include magic in the forest. Also include a character who says “uhn uh” over and over.”

Lucas had his kids help him write the prompt. This is potentially a nice learning exercise to do with your children, provided they’re old enough to be thinking about things like story structure in more abstract terms. I can see kids learning from the effort to correct a deficient AI story and turn it into something engaging. But the ‘book’ Lucas generates with ChatGPT is as nonsensical and devoid of actual plot as the others we’ve seen.

“Once upon a time, in a magical forest with fields full of trees, there lived a little puppy named Fluff. Fluff was a curious puppy and he loved to explore the forest and learn new things.

One day, Fluff heard about a man named Jesus who was very special and had done many wonderful things. Fluff was very interested and wanted to learn more about Jesus, but there was one problem: Fluff couldn’t read.”

He even plays AI narration of the story along with the illustrations and records his kids reacting to it. They don’t seem impressed.

There’s nothing really sinister in Lucas’s story but it did provoke in me an unsettling vision: Churches and political organizations with axes to grind and money to spare, generating propaganda content and buying up copies to ensure Amazon’s algorithm spreads it far and wide. It’s a version of the current strategy used to sell autobiographies of politicians and celebrities.

Those books are generally targeted towards adults though. Kids are a lot easier to influence, or at least confuse, and Amazon has no vested interest in stopping Kindle from making money off propaganda for Kindergartners.

In Literature as Exploration, an influential book on educational literary theory, Professor Louise Rosenblatt argues that the reader is a crucial part of any piece of literature: “There is no such thing as a generic reader or a generic literary work; there are only the potential millions of individual readers or the potential millions of individual literary works. A novel or a poem or a play remains merely inkspots on paper until a reader transforms them into a set of meaningful symbols.”

So books, from Blood Meridian to Hop on Pop, are in part a dialogue between writer and reader. But the machines generating these stories cannot participate in a dialogue. They’re Mechanical Turks, guessing what word comes next based on a mix of complex math and the labor of Kenyan contractors paid less than $2 an hour to make sure the responses aren’t too racist.

This is a problem because real books teach empathy. It’s a widely held view, backed by some research, that reading long-form fiction makes you better able to identify with other people’s thoughts and struggles. Could being handed enough lazy AI slop in first grade impact not only your future literacy but also your capacity for empathy? There’s no real way to know yet, and we have no idea how many kids will be exposed to how much of this stuff.

I reached out to Lucas Kitchen to ask if he shared my concerns about the way these AI-generated books might harm young readers. His book was not the worst offender, but its religious nature did set me on edge. To his credit he responded and gave a reasonably direct answer when I asked if he believed there was potential harm these books could do if allowed to flood Kindle en masse:

“…I wouldn’t say I’m worried, but I would say that I agree with you that there is a lot of crap that is being slung out by people who don’t yet understand their role in the AI generative production workflow. The book that I did was a quick, one hour demo, that would need real work done to it if it was going to be for sale.”

This at least counts as direct by the standards of his peers. Lucas insists that he would never publish a book as unfinished as the one he showed on YouTube. He expressed his belief that there would always be a need for the “artist’s eye, decent editing, a cohesive idea and a vision for what they want to create”. But his attitude seemed to be that the people crapping out disjointed AI kids’ books onto Kindle were just lazy, rather than predatory.

“They jumped into the AI generative world, thinking that they could produce something decent while being lazy. That isn’t the case. It is still hard, artist work to get something that is great. I think that the generative AI model has simply raised the bar for what we will consider great. I think it is advancing art and writing in an amazing way, And the expectations for what we consider good writing and good art will be next level from now on.”


On the whole I have found this investigation dispiriting and worrisome. The upside is that I have learned a lot about the science of building literacy in little people. Education researchers have found that very young children can be influenced towards new behavior by the stories they read. From an article by Peggy Albers in The Atlantic:

“Stories can be used to change children’s perspectives about their views on people in different parts of the world. For example, Hilary Janks works with children and teachers on how images in stories on refugees influence the way refugees are perceived.

Kathy Short studied children’s engagement with literature around human rights. In their work in a diverse K-5 school with 200 children, they found stories moved even such young children to consider how they could bring change in their own local community and school.”

In that last case, students were so moved by the story of anti-child labor activist Iqbal Masih, who was murdered at age 12, that they decided to create a community garden to donate food to a local food bank. Are AI stories capable of inspiring positive actions like that? What about negative ones?

Nobody knows. But we’re all going to learn the answer together, whether we want to or not.
